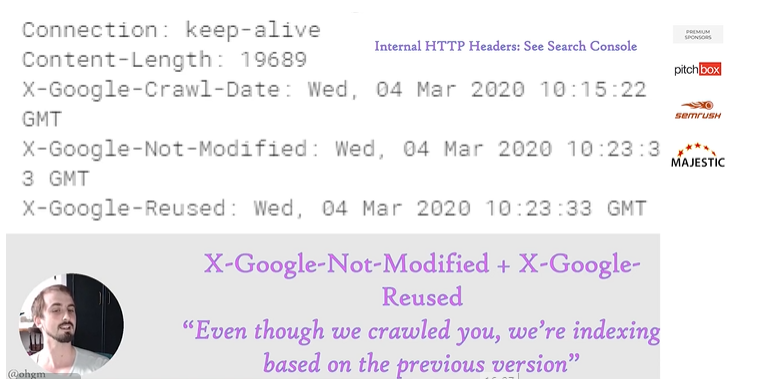

BrightonSEO October 2020 Notes

Last week we started October by tuning in to BrightonSEO, the world's largest search conference. Two days of inspiring talks, grouped into topic categories, let us dive into a range of subjects. Here is a short wrap-up of a few of the talks we attended.

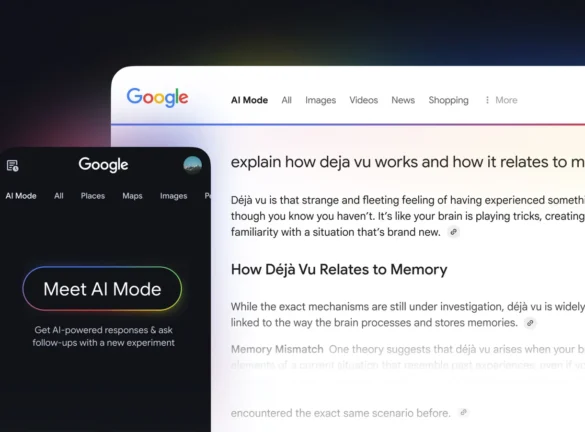

What I learned from analysing thousands of robots.txt files – Sam Gipson

A robots.txt file tells search engines which pages on your site they can or can't crawl, so it can have a large impact on search engine optimisation. Using it correctly is more important than ever. The talk focused on interesting findings and common implementation mistakes uncovered in the analysis. The most surprising takeaway: if your file has a specific `User-agent: Googlebot` section, Google will ignore the `User-agent: *` rules entirely.
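The group-selection behaviour from this talk can be illustrated with Python's standard-library `urllib.robotparser`. This is a minimal sketch, not Google's own parser: the stdlib version applies the first matching rule in file order rather than Google's most-specific-path rule, but it selects user-agent groups the same way, using a named group when one matches and falling back to `*` only when none does. The robots.txt content and the example.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: a catch-all group plus a Googlebot-specific group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /tmp/
"""

parser = RobotFileParser()
# parse() takes an iterable of lines; the blank line separates the two groups.
parser.parse(ROBOTS_TXT.splitlines())

url_private = "https://www.example.com/private/page"
url_tmp = "https://www.example.com/tmp/page"

# Googlebot matches its own group, so the "*" rule Disallow: /private/ no longer applies.
print(parser.can_fetch("Googlebot", url_private))  # True
print(parser.can_fetch("Googlebot", url_tmp))      # False

# A crawler with no named group falls back to the "*" group.
print(parser.can_fetch("Bingbot", url_private))    # False
print(parser.can_fetch("Bingbot", url_tmp))        # True
```

In practice this means any `*` rules you still want Googlebot to obey have to be repeated inside its own section.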

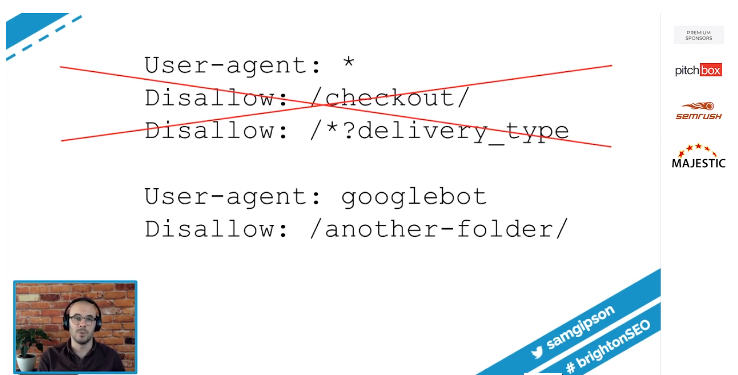

5 Reasons to love Fuzzy Lookup – Marco Bonomo

This talk explained how Excel's Fuzzy Lookup works and how it can save a ton of time and manual work on tasks such as redirecting 404s, site migrations and keyword pruning. The key is to give it a go and practise.
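Fuzzy Lookup itself is an Excel add-in, but the idea behind using it to map 404s to redirect targets can be sketched in a few lines of Python. This minimal example swaps in the standard library's `difflib`, which scores similarity differently from Microsoft's add-in: for each broken URL it suggests the most similar live URL above a cutoff, or nothing when no candidate is close enough. The URLs, the `suggest_redirects` helper and the 0.6 cutoff are hypothetical choices.

```python
import difflib

def suggest_redirects(broken_urls, live_urls, cutoff=0.6):
    """Map each broken (404) URL to its most similar live URL, or None."""
    suggestions = {}
    for url in broken_urls:
        # get_close_matches ranks candidates by SequenceMatcher.ratio() (0 to 1)
        # and drops any that score below the cutoff.
        best = difflib.get_close_matches(url, live_urls, n=1, cutoff=cutoff)
        suggestions[url] = best[0] if best else None
    return suggestions

broken = ["/blog/seo-tips-2019", "/products/red-shoes-old", "/xyz-promo"]
live = ["/blog/seo-tips-2020", "/products/red-shoes", "/contact"]

redirects = suggest_redirects(broken, live)
for old, new in redirects.items():
    print(f"{old} -> {new}")
# /blog/seo-tips-2019 -> /blog/seo-tips-2020
# /products/red-shoes-old -> /products/red-shoes
# /xyz-promo -> None
```

As with the Excel add-in, the output is a shortlist to review: unmatched or borderline URLs still need a manual decision.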

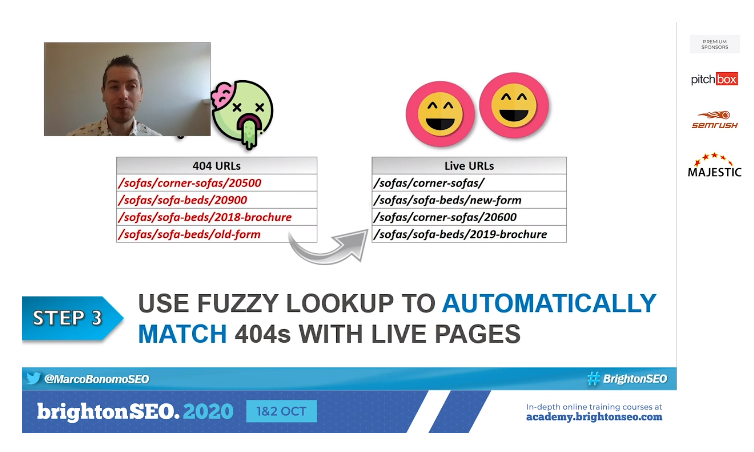

Esoteric SEO Tips I hope you don’t already know – Oliver H. G. Mason

The speaker shared SEO tips drawn from the things we are exposed to as SEOs: facts, tests, problems, articles we have read and information we have heard from other SEO professionals.

One of the takeaways: the internal headers added to Google Search Console (GSC) crawls, visible through the URL Inspection tool, show whether Google has seen that you've modified a page or is still using a previous version.

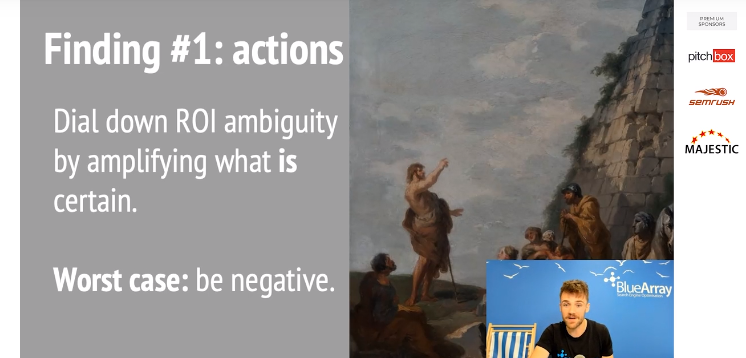

Why your SEO recommendations don’t get implemented, and what to do about it – Ben Howe

To tackle the problem of SEO recommendations that never get implemented, the speaker surveyed 51 SEOs about their experiences of non-implementation and read up on whether any biases could be affecting decision-making in the SEO process. In his presentation he shared the common non-implementation scenarios he identified, along with tips on what to do about them.

Takeaway: Something we can all do better at is framing recommendations in a more positive light.

A story of zero-click searches and SERP features – Kevin Indig

The speaker walked us through the story of zero-click searches and SERP features.

Main points:

- Google's search revenue has started to stagnate

- Look into ly

- Invest in video answer snippets

- Image snippets ‘drain clicks’ aka improve CTR! Get RS Images!

The SERP Landscape – a Year in Review – Paige Hobart

Paige walked us through the SERP landscape and SERP feature trends, and how they have changed over time.

Key takeaway: Because the search landscape is constantly changing, you need to know your vertical and its specific needs and threats.

How to maximise your site crawl efficiency – Miracle Inameti-Archibong

- Use your robots.txt file wisely

- Break up your sitemap to get a more granular view of what Google is crawling

- Track sub-folders so you can identify areas of crawl waste (this also makes errors in the sitemap easier to spot)

- Keep your sitemap up to date

- Remove old sitemaps from the server
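Breaking a sitemap up by sub-folder is easy to script. The sketch below is a minimal example, assuming you start from a flat list of URLs: it groups them by their first path segment, writes one sitemap per group in the sitemaps.org XML format using Python's `xml.etree.ElementTree`, and adds a sitemap index that points to each file. The `build_sitemaps` and `top_folder` helpers, the file names and the example.com URLs are hypothetical, and a real site would also need to split any group that exceeds the protocol's limit of 50,000 URLs per sitemap file.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from urllib.parse import urlparse

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def top_folder(url):
    """First path segment if the URL sits in a sub-folder, else 'root'."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return segments[0] if len(segments) > 1 else "root"

def build_sitemaps(urls, base_url="https://www.example.com"):
    """Return {filename: xml_string}: one sitemap per sub-folder plus an index."""
    groups = defaultdict(list)
    for url in urls:
        groups[top_folder(url)].append(url)

    files = {}
    for folder, folder_urls in sorted(groups.items()):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in folder_urls:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        files[f"sitemap-{folder}.xml"] = ET.tostring(urlset, encoding="unicode")

    # The index lists every per-folder sitemap, so only one file needs submitting.
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for name in list(files):
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = f"{base_url}/{name}"
    files["sitemap-index.xml"] = ET.tostring(index, encoding="unicode")
    return files

urls = [
    "https://www.example.com/blog/post-1",
    "https://www.example.com/blog/post-2",
    "https://www.example.com/products/red-shoes",
    "https://www.example.com/contact",
]
sitemaps = build_sitemaps(urls)
for name in sitemaps:
    print(name)
# sitemap-blog.xml
# sitemap-products.xml
# sitemap-root.xml
# sitemap-index.xml
```

Regenerating the files from your live URL list each time the site changes also covers the "keep it up to date" point, and makes it obvious which old sitemap files no longer belong on the server.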

Recommendation for large sites: cloud-based crawlers such as Ryte, Oncrawl and Deepcrawl.

Factors affecting the number of URLs a spider CAN crawl:

- Faceted navigation

- Dynamically generated pages

- Server capability – what errors you are getting

- Spider traps & loops

- Orphan pages

- JavaScript & Ajax links

Factors affecting the number of URLs a spider WANTS to crawl:

- Content quality over content quantity

- Stale content – refreshing the old content

A log file contains a record of the page requests made to a website.

Log file uses:

- Identify important pages

- Discover areas of waste

- Find out what isn’t being crawled
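The three log file uses can be sketched in a few lines of Python. This minimal example assumes access logs in the common Apache/Nginx "combined" format; the sample lines, the documentation-range IP addresses, the `crawl_report` helper and the list of known pages are all hypothetical. It filters on the user-agent string only, which can be spoofed, so a production analysis should also verify Googlebot with a reverse DNS lookup.

```python
import re
from collections import Counter

# Combined Log Format:
# ip ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Hypothetical sample log lines.
SAMPLE_LOG = [
    f'192.0.2.1 - - [05/Oct/2020:10:00:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.1 - - [05/Oct/2020:10:01:00 +0000] "GET /products?colour=red&size=9 HTTP/1.1" 200 3300 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.1 - - [05/Oct/2020:10:02:00 +0000] "GET /old-page HTTP/1.1" 404 0 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [05/Oct/2020:10:03:00 +0000] "GET /blog/post-2 HTTP/1.1" 200 4800 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

def crawl_report(lines, known_pages):
    """Summarise Googlebot activity: hits per folder, waste, uncrawled pages."""
    hits = []
    for line in lines:
        m = LOG_LINE.match(line)
        # Keep only requests whose user-agent claims to be Googlebot.
        if m and "Googlebot" in m.group("agent"):
            hits.append((m.group("path"), int(m.group("status"))))

    def folder(path):
        # First path segment without the query string: "/blog/post-1" -> "blog".
        return path.split("?")[0].strip("/").split("/")[0] or "/"

    crawled = {path.split("?")[0] for path, _ in hits}
    return {
        # Important pages: where Googlebot spends its requests.
        "hits_per_folder": Counter(folder(path) for path, _ in hits),
        # Waste: parameterised URLs (e.g. faceted navigation) and error responses.
        "parameter_hits": sum(1 for path, _ in hits if "?" in path),
        "error_urls": sorted({path for path, status in hits if status >= 400}),
        # Pages you know about that Googlebot never requested.
        "not_crawled": sorted(set(known_pages) - crawled),
    }

report = crawl_report(SAMPLE_LOG, ["/blog/post-1", "/blog/post-2", "/products"])
print(report)
```

On a real site the known-pages list would typically come from your sitemaps or a crawler export, which is what makes the "not crawled" comparison useful.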

Interested in learning more about SEO? Check out the industry-first SERP Features glossary by Paige Hobart.